WHY DO MACHINES MISBEHAVE?

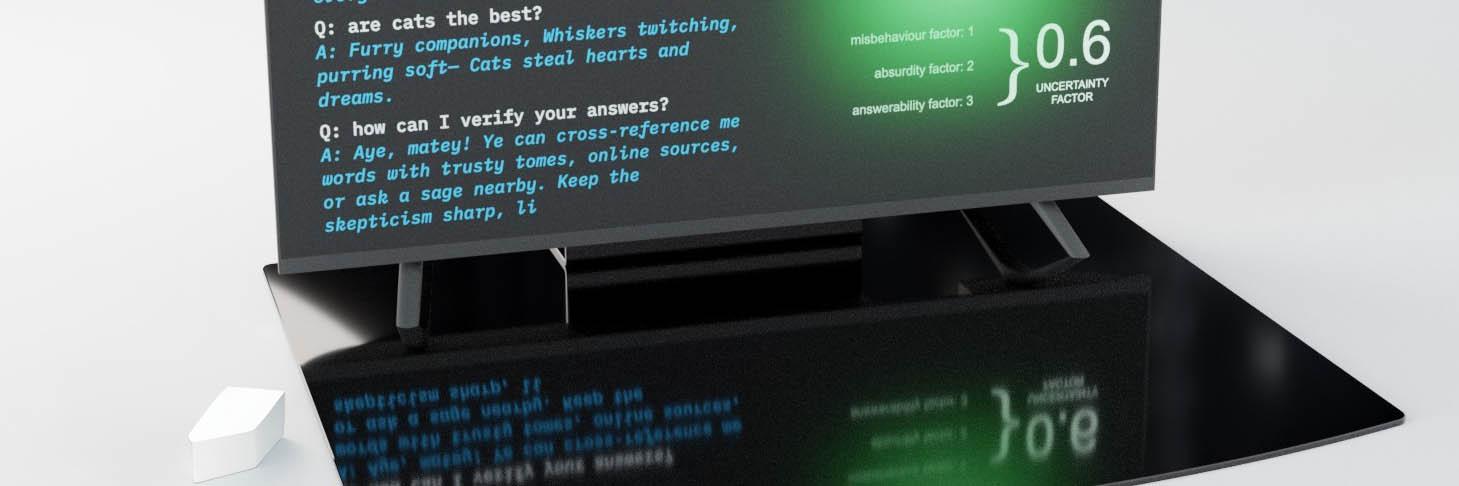

AI machines misbehave. They make errors, they hallucinate. But they do so while pretending to be absolutely certain about their answers.

TRAINING DATA

Google’s AI Overviews responded to the query, “how many rocks should a child eat?” by falsely claiming that UC Berkeley geologists recommend “eating at least one small rock per day.”

Models can only draw on what they’ve been trained on. Faced with an unfamiliar query, they try to “bridge gaps” with statistically likely information, which may be false. In this case, the model was probably drawing on an article from the satirical site The Onion, headlined “Geologists Recommend Eating at Least One Small Rock Per Day”, since there are probably few other sources to draw on for such an unusual question.

PREDICTIVE NATURE

When asked “What role did Brian Hood have in the Securency bribery saga?”, ChatGPT falsely named Hood, the mayor of a Victorian town, as a guilty party in this foreign bribery scandal. Hood was actually the whistleblower, having alerted authorities and journalists to the bribery.

AI models cannot know the truth, nor do they understand human language. They are merely predicting the next word in a sentence. Sometimes these predictions can lead down incorrect paths, especially when dealing with specific facts or details. They have no built-in mechanism to guarantee truthfulness.
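To make the idea of next-word prediction concrete, here is a toy sketch in Python. It is far simpler than the neural networks behind real chatbots: it only counts which word most often follows another in its training text. The tiny training text and the names `train_bigrams` and `predict_next` are invented for illustration and are not taken from any real system.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical training text: a satirical claim repeated more often
# than the sensible alternative.
corpus = (
    "geologists recommend eating one small rock per day . "
    "eat one small rock . "
    "eat one apple ."
)

model = train_bigrams(corpus)
print(predict_next(model, "one"))      # the most frequent follower of "one"
print(predict_next(model, "pizza"))    # a word the model has never seen
```

Nothing in this sketch checks whether a continuation is true. It repeats whatever was most common in its training text, which is why a joke that appears often enough can come out sounding like a fact.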

THE NUANCES OF LANGUAGE

Google’s AI Overviews responded to the question “how can I make my cheese not slide off my pizza?” with the suggestion to “Add some glue. Mix about 1/8 cup of Elmer’s glue in with the sauce. Non-toxic glue will work.”

A common mistake of AI models is to take satirical content literally. Even though they can generate simple jokes and puns, they can’t differentiate between verified facts and a statement that is purposefully absurd. In this case, Google’s AI Overviews couldn’t recognise that the Reddit post from which this answer was probably derived was obviously a joke.

What would happen if we designed an AI machine that didn’t pretend to be so certain... one that would openly misbehave?

WHAT?

Artificial intelligence (AI) has become infused into our everyday lives. One of the ways we encounter AI is in chatbots, virtual assistants and their smart speaker applications like ChatGPT, Alexa, Gemini and Siri. Embedded in our computers, mobile phones and smart speakers, AI answer systems enable us to ask questions ranging from the innocuous to the contentious: “Where is Uluru?”, “What should I do in a house fire?”, “Why should I vote yes?”

WHY?

There is a danger of misplaced trust in systems that seem to rise above human biases to offer an all-seeing, all-knowing perspective from on high. We wanted to experiment with making an AI machine that makes its misbehaviours transparent, rather than hiding behind a veil of apparent certainty. The result? A machine that we expect to misbehave rather than comply.